Deploying a modern, multi-container application in a Docker Swarm environment can be both exciting and challenging. In this post, we'll walk through a sample Docker Compose file that deploys the Hoarder application along with its necessary dependencies. This setup includes a headless Chrome service for browser tasks and a Meilisearch service for fast, efficient search capabilities—all orchestrated via Docker Swarm with NFS-backed persistent storage.

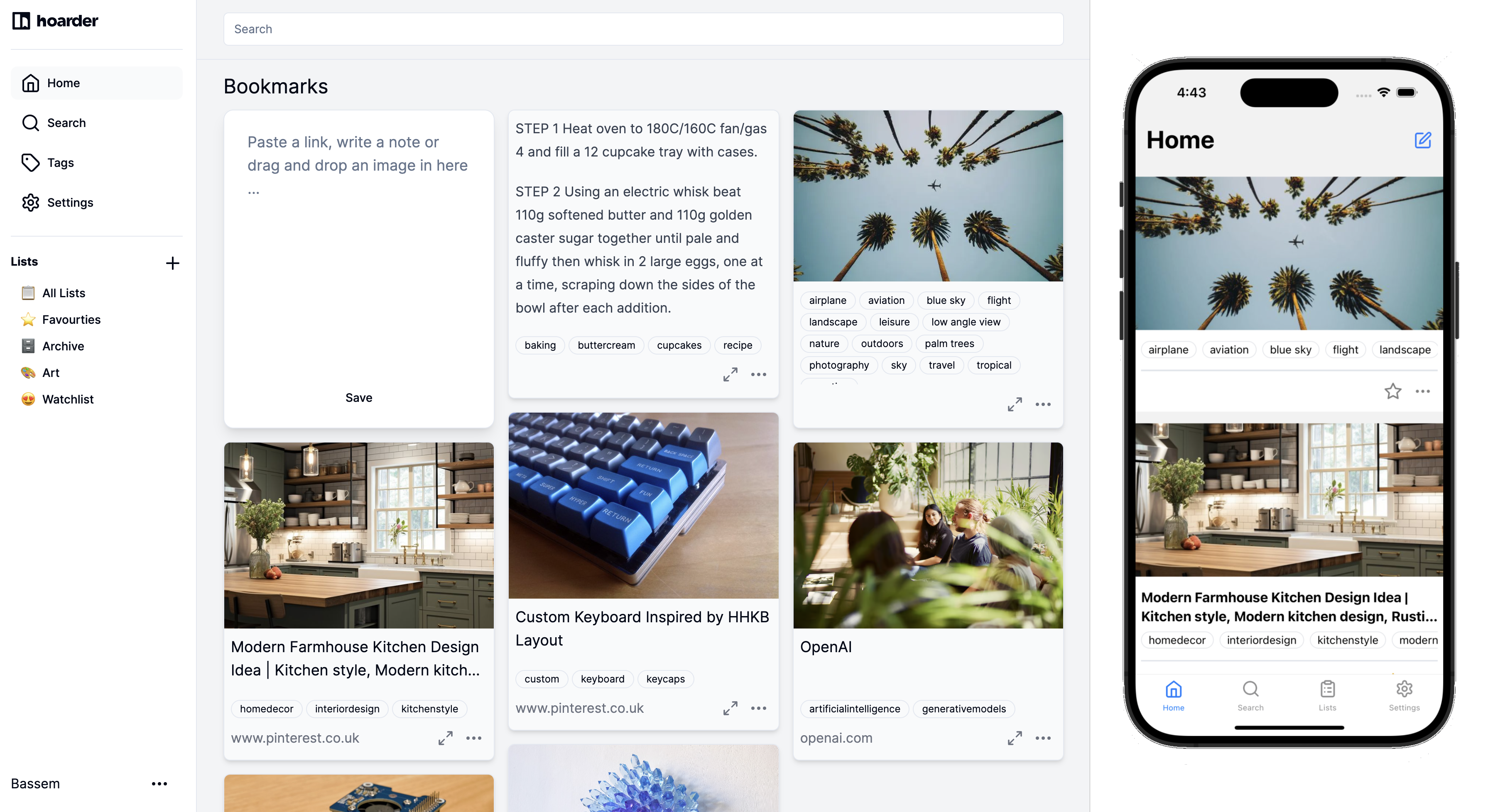

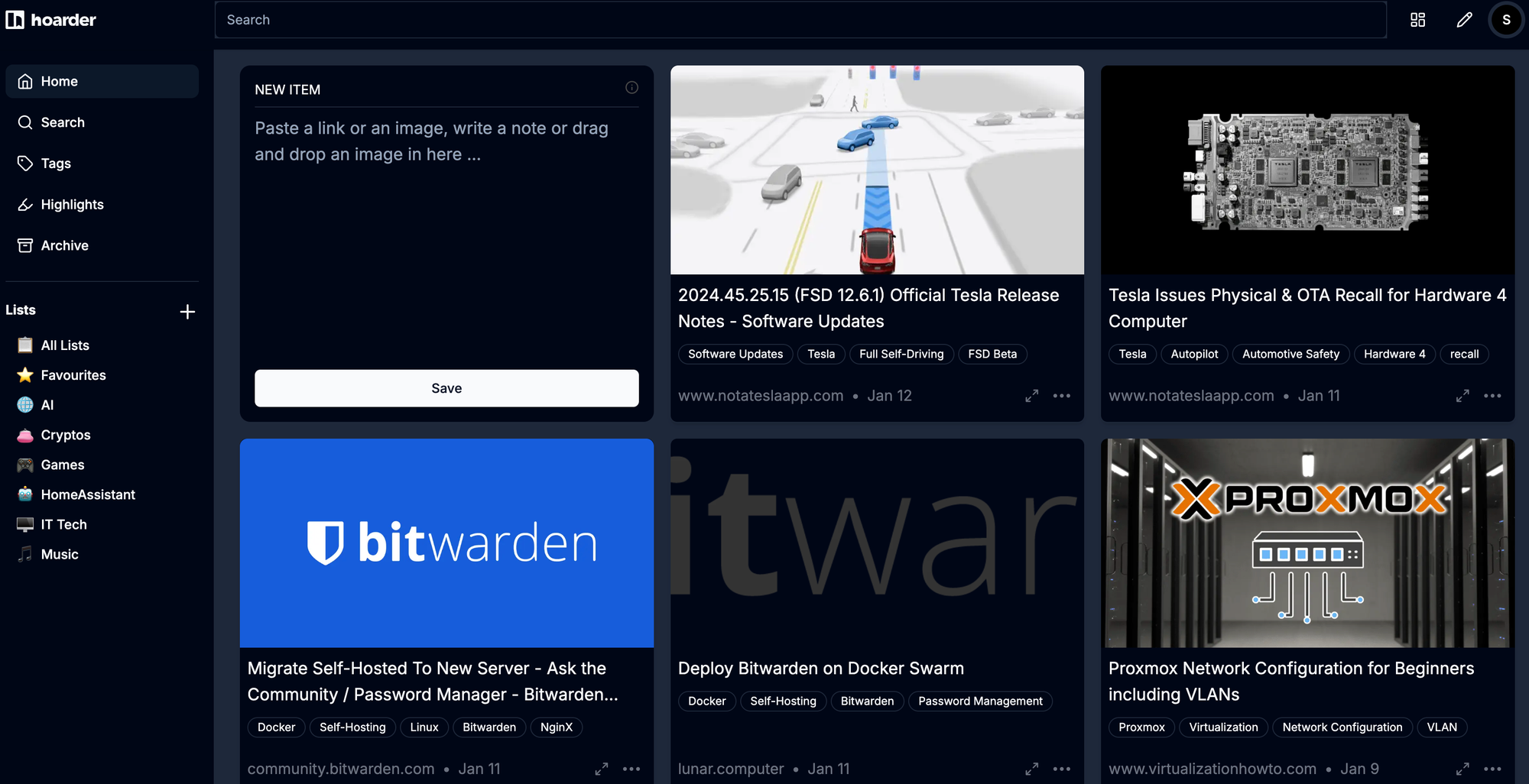

Hoarder is a self-hosted bookmark-everything app with a touch of AI (if you enable it). It also comes with a mobile version, so you can read and bookmark on the go.

Overview

The provided Docker Compose file defines three services:

- web: The main Hoarder application.

- chrome: A headless Chrome container used for browser-based tasks (like rendering or debugging).

- meilisearch: A lightweight, fast search engine to power search functionalities within Hoarder.

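Each service declares `restart: unless-stopped` to enhance resilience. Note that `docker stack deploy` ignores the `restart` key: Swarm applies its own restart policy, which by default restarts a task whenever it exits. Each service is also deployed with a node role constraint that ensures it runs on worker nodes in your Swarm cluster.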
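
Swarm's restart policy can be set explicitly under `deploy.restart_policy`. The following is a minimal sketch, not part of the original file; the delay, attempt count, and window are illustrative assumptions rather than values taken from this setup:

```yaml
services:
  web:
    image: ghcr.io/hoarder-app/hoarder:latest
    deploy:
      # Swarm reads restart behavior from here; all values are illustrative.
      restart_policy:
        condition: any      # restart whenever the task exits (Swarm's default)
        delay: 5s           # pause between restart attempts
        max_attempts: 3     # give up after three failed attempts
        window: 120s        # how long to wait before judging a restart successful
      placement:
        constraints:
          - node.role == worker
```

The same `restart_policy` block could be repeated under the `deploy` key of the chrome and meilisearch services.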

Persistent storage is backed by NFS volumes, ensuring that data such as Hoarder content and Meilisearch indexes remain durable across container restarts or migrations.

The Docker Compose File Breakdown

Let's examine the key components of the Compose file with sensitive details replaced by placeholders:

1. Hoarder Application (web service)

    services:
      web:
        image: ghcr.io/hoarder-app/hoarder:latest
        restart: unless-stopped
        volumes:
          - nfs-hoarder:/data
        ports:
          - 2929:3000
        environment:
          MEILI_ADDR: http://meilisearch:7700
          BROWSER_WEB_URL: http://chrome:9222
          OPENAI_API_KEY: "YOUR_OPENAI_API_KEY_HERE"
          # OLLAMA_BASE_URL: http://your-ollama-instance:11434
          # OLLAMA_KEEP_ALIVE: 5m
          # INFERENCE_TEXT_MODEL: your_inference_model_name
          DATA_DIR: /data
          MEILI_MASTER_KEY: YOUR_MEILI_MASTER_KEY_HERE
          NEXTAUTH_URL: http://0.0.0.0:3000
          NEXTAUTH_SECRET: YOUR_NEXTAUTH_SECRET_HERE
        deploy:
          placement:
            constraints:
              - node.role == worker

- Image: The latest version of Hoarder is pulled from the GitHub Container Registry.

- Volumes: It mounts the `nfs-hoarder` volume at `/data` to persist application data.

- Ports: The container's port 3000 is mapped to host port 2929.

- Environment Variables:

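  - MEILI_ADDR and BROWSER_WEB_URL point to the Meilisearch and Chrome services, respectively, using their service names as hostnames on the stack's network.

  - NEXTAUTH_URL is set to http://0.0.0.0:3000 here; in practice it should probably be the address users actually reach Hoarder at, such as your host name with the published port 2929.

  - OPENAI_API_KEY, MEILI_MASTER_KEY, and NEXTAUTH_SECRET are represented by placeholders. In production, consider using Docker secrets or a secure environment variable management system.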

- Deployment Constraints: The service is restricted to run on Docker Swarm worker nodes.
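
One low-effort way to keep real keys out of the Compose file is variable substitution: `docker stack deploy` fills `${...}` references from the environment of the shell it runs in. The sketch below assumes you export the three variables before deploying; the shell variable names are an assumption, chosen only to mirror the setting names. The values still end up in the service definition (they are visible through `docker service inspect`, for example), so treat this as a step short of Docker secrets.

```yaml
services:
  web:
    image: ghcr.io/hoarder-app/hoarder:latest
    environment:
      # Each ${...} is replaced at deploy time from the deploying shell's environment.
      OPENAI_API_KEY: ${OPENAI_API_KEY}
      MEILI_MASTER_KEY: ${MEILI_MASTER_KEY}
      NEXTAUTH_SECRET: ${NEXTAUTH_SECRET}
```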

2. Headless Chrome (chrome service)

      chrome:
        image: gcr.io/zenika-hub/alpine-chrome:123
        restart: unless-stopped
        command:
          - --no-sandbox
          - --disable-gpu
          - --disable-dev-shm-usage
          - --remote-debugging-address=0.0.0.0
          - --remote-debugging-port=9222
          - --hide-scrollbars
        deploy:
          placement:
            constraints:
              - node.role == worker

- Image: Uses a lightweight Alpine-based Chrome image.

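- Command: The command-line flags disable sandboxing and GPU acceleration and tell Chrome not to rely on /dev/shm, which is small by default inside containers. The two remote-debugging flags expose the DevTools endpoint on port 9222, which is the address Hoarder reaches through BROWSER_WEB_URL.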

- Deployment Constraints: Also limited to worker nodes.

3. Meilisearch (meilisearch service)

      meilisearch:
        image: getmeili/meilisearch:v1.11.1
        restart: unless-stopped
        environment:
          MEILI_NO_ANALYTICS: "true"
          DATA_DIR: /data
          MEILI_ADDR: http://0.0.0.0:7700
          MEILI_MASTER_KEY: YOUR_MEILI_MASTER_KEY_HERE
          NEXTAUTH_URL: http://0.0.0.0:3000
          NEXTAUTH_SECRET: YOUR_NEXTAUTH_SECRET_HERE
        volumes:
          - nfs-meilisearch:/meili_data
        deploy:
          placement:
            constraints:
              - node.role == worker

- Image: Leverages Meilisearch’s official Docker image for an efficient search service.

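- Environment Variables: Disables analytics and configures access via a master key, which must match the MEILI_MASTER_KEY given to the web service. Sensitive keys have been replaced by placeholders. DATA_DIR, MEILI_ADDR, NEXTAUTH_URL, and NEXTAUTH_SECRET look like Hoarder settings carried over from the web service; Meilisearch most likely ignores them.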

- Volumes: Uses the `nfs-meilisearch` volume to persist search indexes.

- Deployment Constraints: Runs on worker nodes.
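
If Meilisearch only needs its own analytics and master-key settings, which is our understanding rather than something the original file states, the environment block can be trimmed. A sketch under that assumption:

```yaml
services:
  meilisearch:
    image: getmeili/meilisearch:v1.11.1
    environment:
      MEILI_NO_ANALYTICS: "true"
      # Must match the MEILI_MASTER_KEY given to the web service.
      MEILI_MASTER_KEY: YOUR_MEILI_MASTER_KEY_HERE
    volumes:
      - nfs-meilisearch:/meili_data
    deploy:
      placement:
        constraints:
          - node.role == worker
```

Leaving the extra variables in place should be harmless; trimming them mainly keeps NEXTAUTH_SECRET out of a container that has no use for it.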

NFS Volumes for Persistent Storage

Persistent storage is crucial for stateful applications. This setup defines two NFS volumes:

    volumes:
      nfs-hoarder:
        driver: local
        driver_opts:
          device: :/path/to/your/storage/hoarder
          o: addr=YOUR_NFS_SERVER_IP,nolock,soft,rw,nfsvers=4
          type: nfs
      nfs-meilisearch:
        driver: local
        driver_opts:
          device: :/path/to/your/storage/meilisearch
          o: addr=YOUR_NFS_SERVER_IP,nolock,soft,rw,nfsvers=4
          type: nfs

- nfs-hoarder: Provides a dedicated storage space for Hoarder’s data.

- nfs-meilisearch: Ensures search indexes persist across container rebuilds and crashes.

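- Driver Options: Both volumes mount over NFS v4 (`nfsvers=4`) with the options `nolock,soft,rw`. `rw` mounts the share read-write. `soft` makes a request fail with an error once the client's retries run out instead of blocking indefinitely, which keeps containers from hanging but can lose writes if the server becomes unreachable. `nolock` disables the separate NFS lock protocol, which mainly matters for NFS v2 and v3. Replace YOUR_NFS_SERVER_IP with the actual IP address of your NFS server.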
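
If losing a write worries you more than a stalled container does, a hard mount is the usual alternative to `soft`. The variant below is a sketch of that trade-off, not the configuration used in this post; only the `soft` option has changed:

```yaml
volumes:
  nfs-hoarder:
    driver: local
    driver_opts:
      type: nfs
      device: :/path/to/your/storage/hoarder
      # "hard" replaces "soft": I/O blocks and retries until the server responds.
      o: addr=YOUR_NFS_SERVER_IP,nolock,hard,rw,nfsvers=4
```

With a hard mount, an NFS outage shows up as hung processes rather than I/O errors, so which behavior is preferable depends on how your services cope with each.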

Docker Swarm Considerations

By deploying with Docker Swarm:

- Scalability: The services can be scaled horizontally across multiple nodes.

- Resilience: Swarm's scheduler ensures services are re-launched in case of node failures.

- Constraints: The `node.role == worker` constraints help distribute workload appropriately by ensuring that none of these services run on manager nodes (which ideally handle orchestration tasks).

This Docker Compose configuration demonstrates a robust approach to deploying a complex application stack in a Docker Swarm environment. By combining Hoarder, headless Chrome for browser automation, and Meilisearch for search functionalities—underpinned by persistent NFS volumes—you can achieve a highly available and scalable solution.

Key Takeaways:

- Use Docker Swarm for high availability and seamless service recovery.

- Employ NFS volumes to maintain persistent application data across service restarts.

- Keep environment configurations flexible and substitute sensitive information with secure placeholders, managing secrets appropriately in production.

Deploying this setup in your cluster will give you a resilient and scalable foundation, paving the way for further customization and improvement as your application grows.

Replace the placeholders (YOUR_OPENAI_API_KEY_HERE, YOUR_MEILI_MASTER_KEY_HERE, YOUR_NEXTAUTH_SECRET_HERE, and YOUR_NFS_SERVER_IP) with your actual values, and handle the real values with a proper secret management strategy.