The world of enterprise networking is vast and technically dense. From BGP path selection and NAT policies to VLAN tagging and firewall rules, the knowledge required to navigate these systems effectively is significant. Traditionally, engineers relied on vendor documentation, forums, and decades of personal experience. But now, with the rise of powerful open-source language models, there’s a new way to encapsulate this expertise into a tool that’s fast, private, and deeply knowledgeable.

This project explores how I fine-tuned Meta’s LLaMA 3.2, the latest iteration of the LLaMA series, to serve as a domain-specific assistant for network engineers. The result is a fully self-hosted system capable of answering configuration questions, explaining protocols, reviewing CLI syntax, and even assisting in troubleshooting workflows.

To train the model, I leveraged not only public networking documentation and RFCs, but also my company's internal knowledge base of real-world NetScaler troubleshooting cases and solutions. This provided rich, field-tested examples and allowed the assistant to go far beyond surface-level understanding.

Why Fine-Tune a Language Model for Networking?

While large models like GPT-4 or Claude can answer general questions, they often struggle with the specificity and depth of technical domains. Network engineering, in particular, involves vendor-specific syntax, uncommon acronyms, and layered concepts that go far beyond what general-purpose models have seen during pretraining.

By fine-tuning LLaMA 3.2, which brings improved reasoning and instruction-following capabilities compared to previous LLaMA versions, I created a model that understands the subtleties of routing loops, OSPF area design, NAT traversal, BGP timers, and especially NetScaler concepts like content switching, responder policies, or SSL offloading.

Thanks to our internal corpus of real troubleshooting workflows, the model doesn’t just know how the technology is supposed to work. It also knows how it fails in practice, and how to fix it.

Turning Documentation and Internal Knowledge into a Training Set

I started by converting dense networking documentation into high-quality instruction-following examples. Using Python scripts, I parsed RFCs, configuration guides, and internal training material. Most importantly, I integrated our internal support database of solved NetScaler issues, field notes, and engineer playbooks.

The extracted content was then transformed into a simple instruction-output format, with one record per training sample. For example, the model might be prompted with:

“How do I troubleshoot HTTP 502 errors on a NetScaler load balancer?”

and it would return a step-by-step diagnosis, including key CLI commands, policy inspection steps, and real mitigation strategies.
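As a rough illustration of that format, here is a minimal sketch of how one record could be built and serialized. The field names `instruction` and `output`, the JSON Lines layout, and the helper names are assumptions for illustration, and the answer text is a placeholder rather than real model output.

```python
import json


def make_sample(instruction: str, output: str) -> dict:
    """Build one instruction-output training record (field names are assumed)."""
    instruction, output = instruction.strip(), output.strip()
    if not instruction or not output:
        raise ValueError("instruction and output must both be non-empty")
    return {"instruction": instruction, "output": output}


def to_jsonl(samples: list) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(s, ensure_ascii=False) for s in samples)


sample = make_sample(
    "How do I troubleshoot HTTP 502 errors on a NetScaler load balancer?",
    "<step-by-step diagnosis written by an engineer goes here>",
)
jsonl_text = to_jsonl([sample])
```

Keeping one JSON object per line makes the dataset easy to stream, diff, and append to as new cases are added.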

The final dataset was carefully cleaned and validated, ensuring that the model was exposed to realistic cases and actual production scenarios, not just textbook examples.
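The exact cleaning rules depend on the corpus. A minimal sketch of one possible pass is shown below, assuming records with `instruction` and `output` fields; the function name `clean_dataset` and the `min_output_chars` threshold are hypothetical choices, not the rules actually used in this project.

```python
def clean_dataset(samples, min_output_chars=20):
    """Drop empty, too-short, and duplicate records (illustrative rules only)."""
    seen = set()
    kept = []
    for s in samples:
        # Collapse runs of whitespace left over from document extraction.
        instruction = " ".join(str(s.get("instruction", "")).split())
        output = str(s.get("output", "")).strip()
        if not instruction or len(output) < min_output_chars:
            continue  # incomplete record or an answer too short to be useful
        key = instruction.lower()
        if key in seen:
            continue  # same question already kept (case-insensitive match)
        seen.add(key)
        kept.append({"instruction": instruction, "output": output})
    return kept


raw = [
    {"instruction": "Explain  SSL offloading", "output": "x" * 40},
    {"instruction": "explain ssl offloading", "output": "y" * 40},  # duplicate
    {"instruction": "What is a VIP?", "output": "short"},           # too short
]
cleaned = clean_dataset(raw)
```

A real pipeline would likely add checks that are specific to the source material, such as removing customer identifiers from support tickets.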

Training the Network Expert Model with LLaMA 3.2

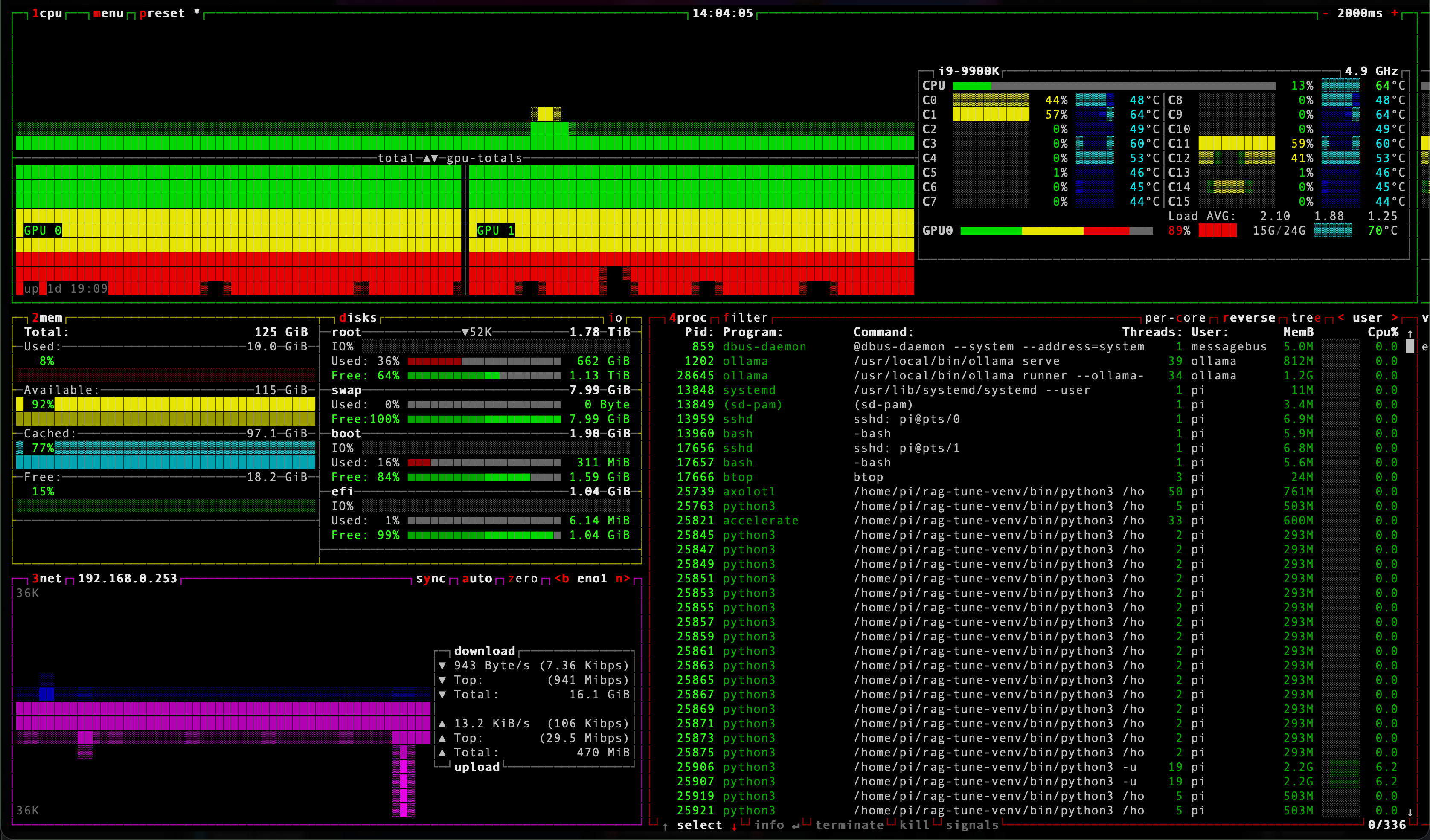

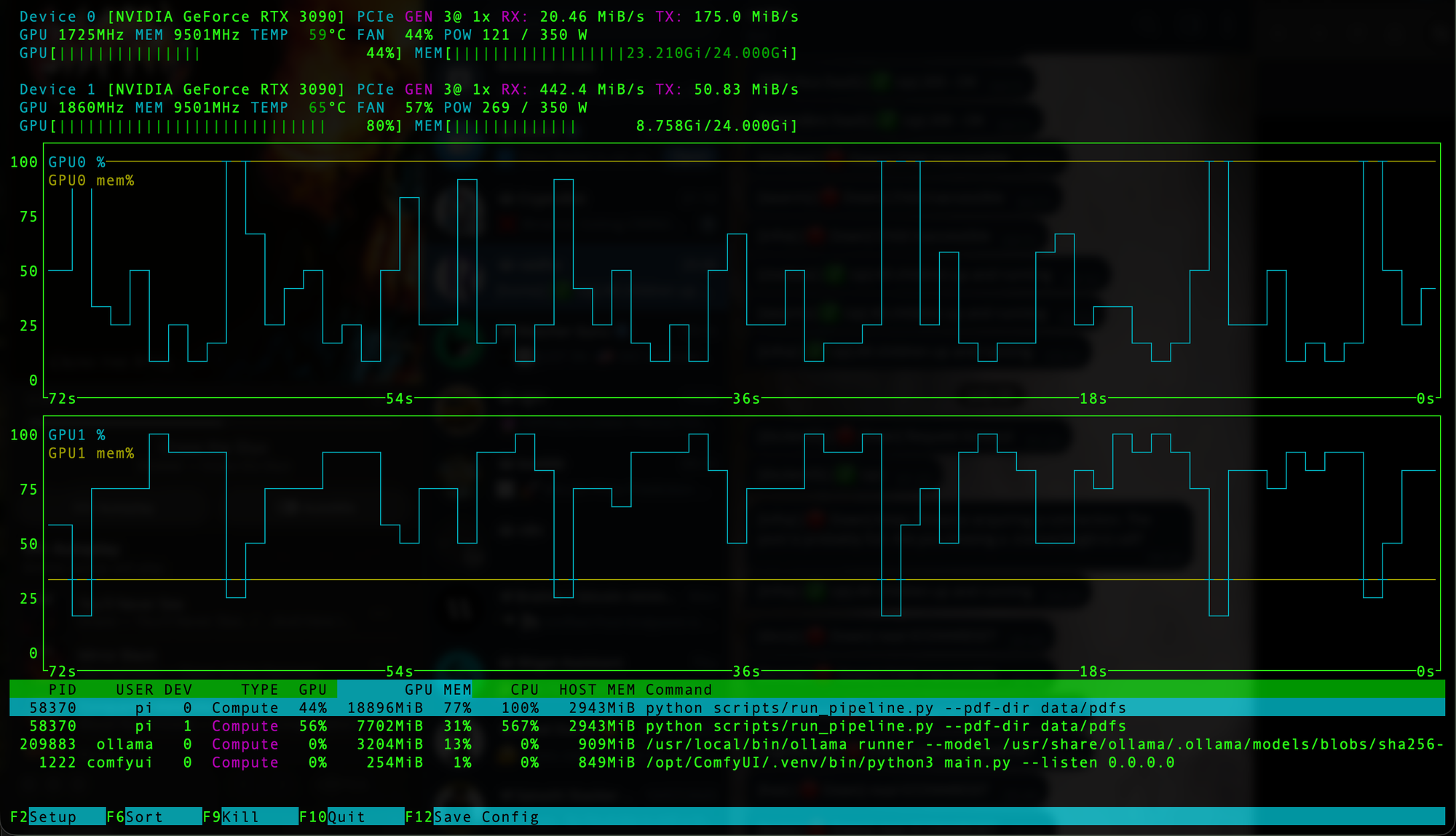

The training process uses LLaMA 3.2, typically the 8B or 32B instruction-tuned variant. To make the process efficient and accessible, I applied LoRA (Low-Rank Adaptation) in its QLoRA form, which trains the small adapter matrices on top of a 4-bit quantized base model. This allowed training to proceed on a dual-GPU setup, even for large models, using mixed precision and gradient checkpointing to reduce memory usage.
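To see why LoRA and 4-bit quantization shrink the problem so much, the arithmetic can be sketched in a few lines. For a weight matrix of shape `d_out x d_in`, LoRA trains two small matrices of rank `r` instead of the full matrix. The layer size 4096 and rank 16 below are illustrative assumptions, not this project's settings, and the memory estimate ignores quantization metadata, activations, and optimizer state.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters for a rank-r adapter: A is (r x d_in), B is (d_out x r)."""
    return r * (d_in + d_out)


def weight_bytes(n_params: int, bits_per_param: int) -> int:
    """Approximate storage for the weights alone at a given precision."""
    return n_params * bits_per_param // 8


d, r = 4096, 16                      # assumed layer width and adapter rank
full = full_params(d, d)             # 16,777,216
lora = lora_params(d, d, r)          # 131,072
ratio = lora / full                  # 0.0078125, i.e. under 1% of the layer

n = 8_000_000_000                    # an 8B-parameter base model
fp16_gb = weight_bytes(n, 16) / 1e9  # 16.0 GB of weights at 16-bit
int4_gb = weight_bytes(n, 4) / 1e9   # 4.0 GB of weights at 4-bit
```

The frozen base weights dominate memory, which is why quantizing them to 4 bits matters more than the size of the adapters themselves.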

All parameters and hyperparameters are defined in a YAML configuration file. This includes the base model path, dataset location, learning rate, number of epochs, batch size, and LoRA adapter settings. The training script orchestrates the full pipeline from tokenizer preparation to saving the adapted weights.
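One way to keep such a configuration honest is to validate it before any GPU time is spent. The sketch below assumes the YAML file has already been parsed into a plain dictionary; every key name, path, and value shown is hypothetical and only mirrors the categories listed above.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class TrainConfig:
    base_model: str
    dataset_path: str
    learning_rate: float
    num_epochs: int
    batch_size: int
    lora_r: int
    lora_alpha: int
    lora_dropout: float

    @classmethod
    def from_dict(cls, raw: dict) -> "TrainConfig":
        """Reject missing or unknown keys, then sanity-check the values."""
        expected = {f.name for f in fields(cls)}
        missing, unknown = expected - set(raw), set(raw) - expected
        if missing or unknown:
            raise ValueError(f"missing={sorted(missing)} unknown={sorted(unknown)}")
        cfg = cls(**raw)
        if cfg.learning_rate <= 0 or cfg.num_epochs < 1 or cfg.batch_size < 1:
            raise ValueError("learning_rate, num_epochs and batch_size must be positive")
        if cfg.lora_r < 1 or not 0.0 <= cfg.lora_dropout < 1.0:
            raise ValueError("lora_r must be >= 1 and lora_dropout in [0, 1)")
        return cfg


# Stand-in for the parsed YAML file; all values are placeholders.
raw_config = {
    "base_model": "/models/base",
    "dataset_path": "/data/train.jsonl",
    "learning_rate": 2e-4,
    "num_epochs": 3,
    "batch_size": 4,
    "lora_r": 16,
    "lora_alpha": 32,
    "lora_dropout": 0.05,
}
config = TrainConfig.from_dict(raw_config)
```

Failing fast on a misspelled key is cheap insurance when a single training run can take hours.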

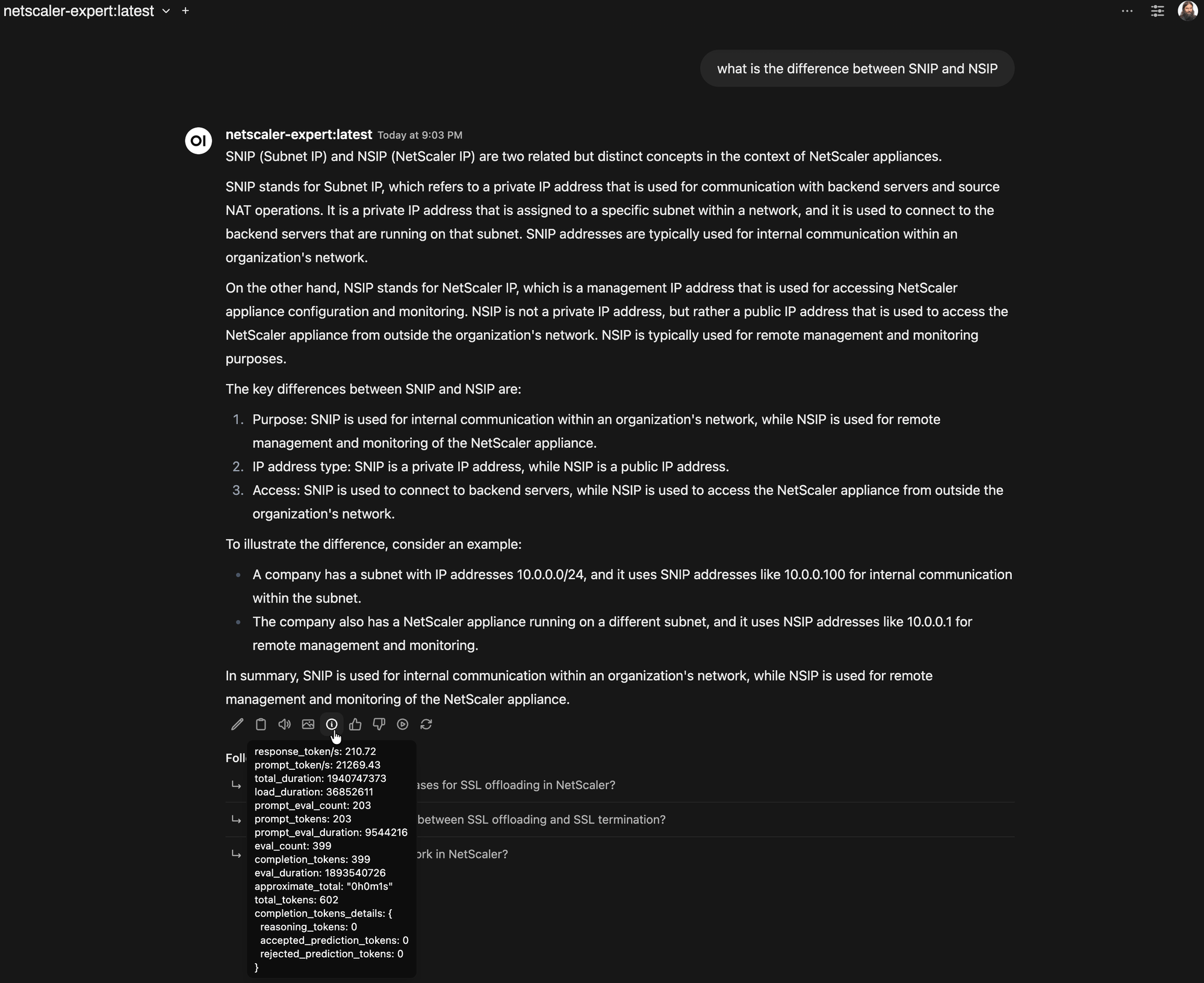

Once trained, the model behaves like a genuine subject matter expert. It recognizes network topologies, interprets configuration patterns, and provides command-line examples in a style consistent with actual enterprise deployments, with a strong specialization in NetScaler topics.

Evaluating the Model's Understanding

To verify the quality of the model, I used both automated metrics and manual prompt-based testing. Quantitatively, I measured perplexity and F1 scores against a held-out set of questions. But the more telling results came from real-world prompts.
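For readers who want to reproduce this kind of measurement, here is a minimal sketch of both metrics. It assumes perplexity is computed from per-token natural-log probabilities and that F1 is the token-overlap variant commonly used for question answering; the evaluation described above may have used a different F1 definition.

```python
import math
from collections import Counter


def perplexity(token_logprobs):
    """exp of the negative mean log-probability (natural log) over tokens."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall, using whitespace tokens."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    if not pred or not ref:
        return float(pred == ref)  # both empty counts as a match
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


# A model that assigns probability 0.5 to every token has perplexity 2.
ppl = perplexity([math.log(0.5)] * 4)

# 3 shared tokens, 4 predicted, 3 in the reference: precision 0.75, recall 1.0.
f1 = token_f1("check the nat rules", "check nat rules")
```

Token-level F1 rewards overlap in wording, so it is best read alongside manual review for answers that are correct but phrased differently.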

For instance, when asked how to troubleshoot asymmetric routing in a dual-WAN setup, the model suggested examining NAT rules, routing tables, and using policy-based routing to steer return traffic appropriately. In another test, it correctly explained the steps to migrate from Cisco ASA to a FortiGate appliance while preserving NAT behavior and ACL logic.

Most impressively, when given anonymized NetScaler ticket descriptions from our support history, the model was able to replicate the resolution path that engineers had documented, indicating real learning from real cases.

Model Deployment and Integration

After fine-tuning, the model was converted into GGUF format to be compatible with tools like Ollama and llama.cpp. This conversion makes the model easily deployable on local systems, air-gapped environments, or Docker containers.

The result is a fast, self-hosted inference engine that can be used through a web UI, a CLI chatbot, or even a voice-based interface when paired with a speech recognition frontend.
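A CLI chatbot or web UI can talk to the model through Ollama's local HTTP API. The sketch below is a minimal client under stated assumptions: Ollama is running on its default port, and the model was registered under the name `network-expert`, which is a hypothetical name chosen for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL_NAME = "network-expert"                       # hypothetical model name


def build_request(prompt: str, model: str = MODEL_NAME) -> urllib.request.Request:
    """Prepare a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text (requires a running server)."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `ask("Explain OSPF area types in two sentences.")` would return the model's answer as a string. Because everything stays on localhost, no prompt or log data leaves the machine.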

To streamline the entire lifecycle, we also defined a CI/CD workflow in GitLab. This includes jobs for dependency installation, training, artifact packaging, and model deployment preparation. The pipeline can be triggered automatically when new documents or datasets are added, allowing for continuous improvement of the assistant.

Real-World Use Cases

The model has already been applied in several practical scenarios. In troubleshooting contexts, it can guide engineers through packet flow debugging or assist in identifying misconfigured ACLs. During network migrations, it can recommend best practices based on the specific vendors involved. It can also serve as a configuration reviewer, taking a snippet of CLI and pointing out potential mistakes.

For NetScaler specifically, the model has proven invaluable. It can walk through the setup of a content switching virtual server with SNI support, suggest policy expression corrections, and help junior engineers troubleshoot SSL handshake failures in minutes rather than hours.

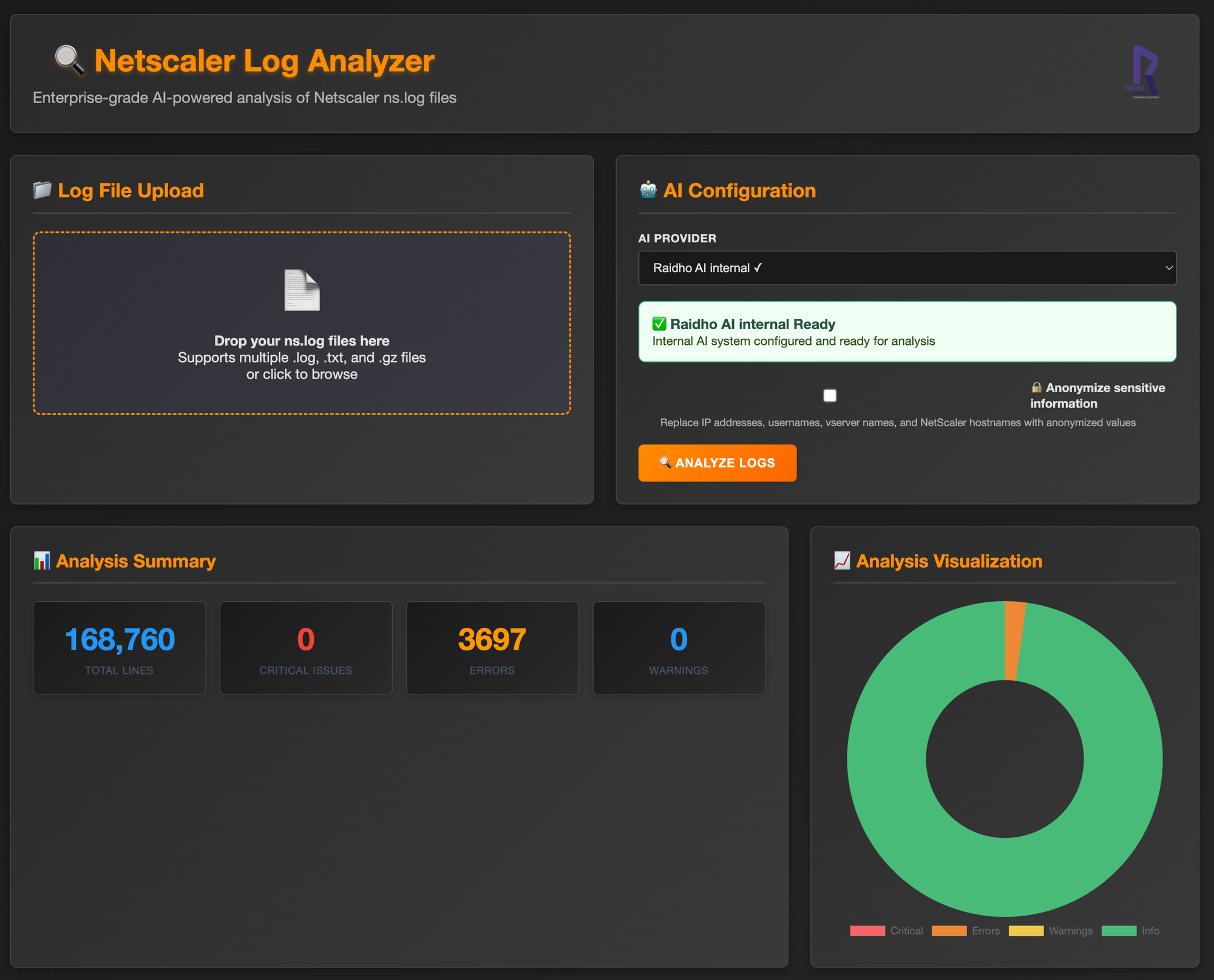

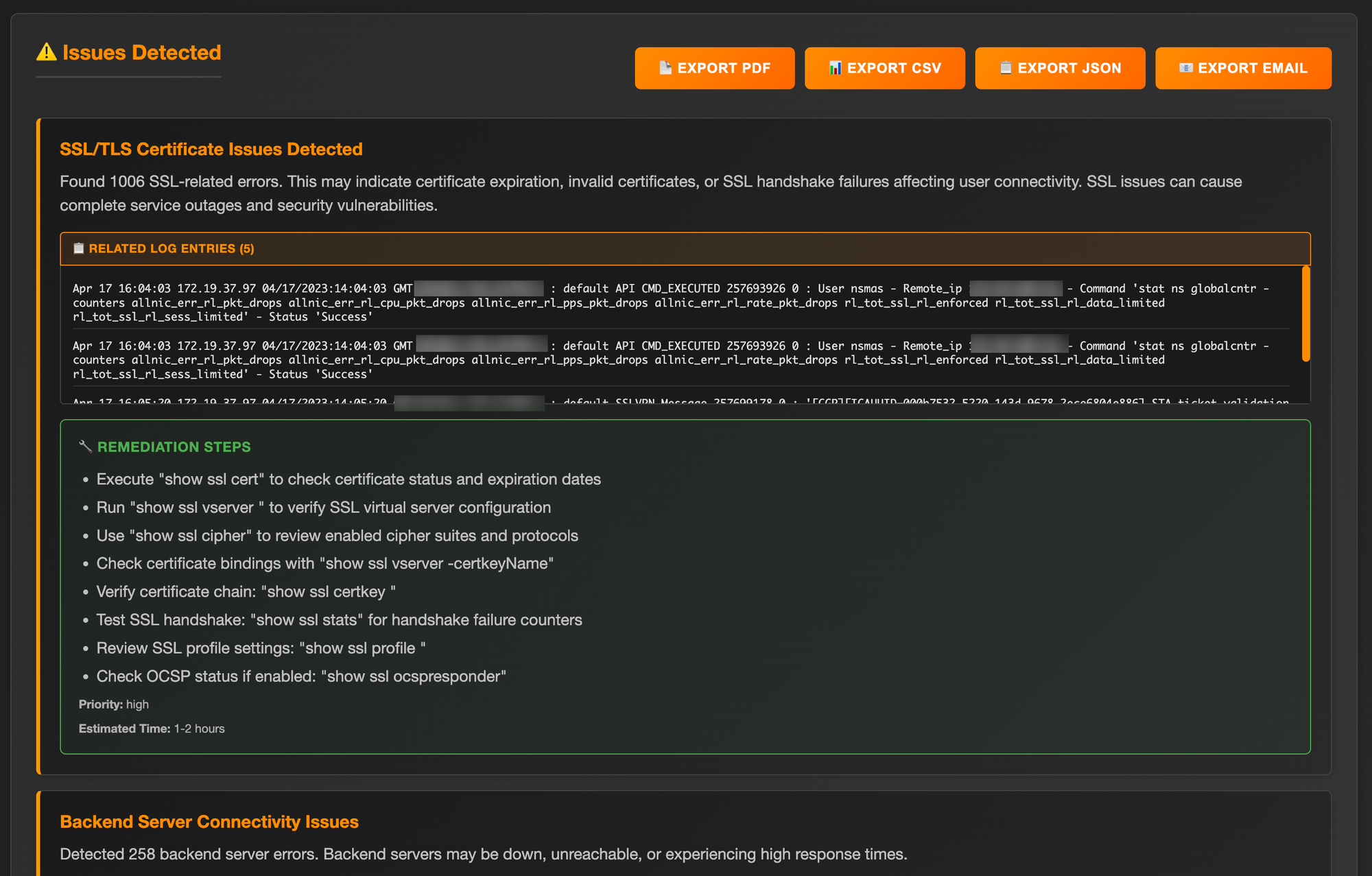

To push the concept further, I built a web interface where NetScaler log files can simply be dropped. Behind the scenes, the model parses and analyzes the logs, then generates a comprehensive action plan. It highlights key issues, recommends remediation steps, and even estimates the time required to perform each task. This turns reactive debugging into proactive resolution, powered entirely by AI.
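Log files are often longer than a model's context window, so some form of chunking is usually needed before analysis. The sketch below shows one simple approach, splitting on whole lines under a character budget and wrapping each chunk in a prompt. The function names, the 4000-character budget, and the prompt wording are all assumptions for illustration, not the interface's actual implementation.

```python
def chunk_log(text: str, max_chars: int = 4000) -> list:
    """Split a log into chunks of whole lines that fit within max_chars.

    A single line longer than max_chars is kept intact in its own chunk.
    """
    chunks, current, size = [], [], 0
    for line in text.splitlines():
        if current and size + len(line) + 1 > max_chars:
            chunks.append("\n".join(current))
            current, size = [], 0
        current.append(line)
        size += len(line) + 1  # +1 accounts for the newline separator
    if current:
        chunks.append("\n".join(current))
    return chunks


PROMPT_TEMPLATE = (
    "You are a NetScaler troubleshooting assistant.\n"
    "Analyze the log excerpt below. List the key issues, the recommended\n"
    "remediation steps, and a time estimate for each step.\n\n"
    "Log excerpt:\n{log}\n"
)


def build_prompt(chunk: str) -> str:
    """Wrap one log chunk in the analysis instructions."""
    return PROMPT_TEMPLATE.format(log=chunk)


demo_log = "\n".join(f"line {i}" for i in range(100))
prompts = [build_prompt(c) for c in chunk_log(demo_log, max_chars=50)]
```

Splitting on line boundaries keeps each log entry whole, which matters when a single entry carries the timestamp, module, and message together.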

I verified each log I submitted over the past several months, and the analysis has been very reliable, especially since I fine-tuned this dedicated LLM on our internal troubleshooting cases. It now helps me go much faster when parsing NetScaler logs and identifying root causes. It’s like having a 24/7 expert assistant by my side, always ready to ingest and digest logs (:

Wow.

This project demonstrates how fine-tuning open-source models like LLaMA 3.2 can yield powerful, specialized assistants for technical domains. For network engineers, it creates a new kind of partner, one that understands the tools, the language, and the complexity of the job.

By training on real support cases and troubleshooting data from my company’s NetScaler deployments, the assistant has become much more than a summarizer. It is a field-tested expert that thinks the way a real engineer would.

As enterprise environments continue to evolve, domain-specific AI models will play an essential role in scaling expertise, accelerating troubleshooting, and ensuring resilience. The Network Expert LLM is one example of what's possible when modern AI meets real operational knowledge.

I have so many more ideas…