As mentioned in a previous blog post, I need to get rid of the various Docker containers hosted on several virtual machines. The goal is to simplify their management and make them highly available, instead of relying only on the hypervisor HA, for instance.

There are 4 topics to address to fulfil this kind of migration. I will use this blog (www.archy.net) as the example: it is self-hosted on Docker, with a Ghost container and a MariaDB database running in Docker as well.

The target is :

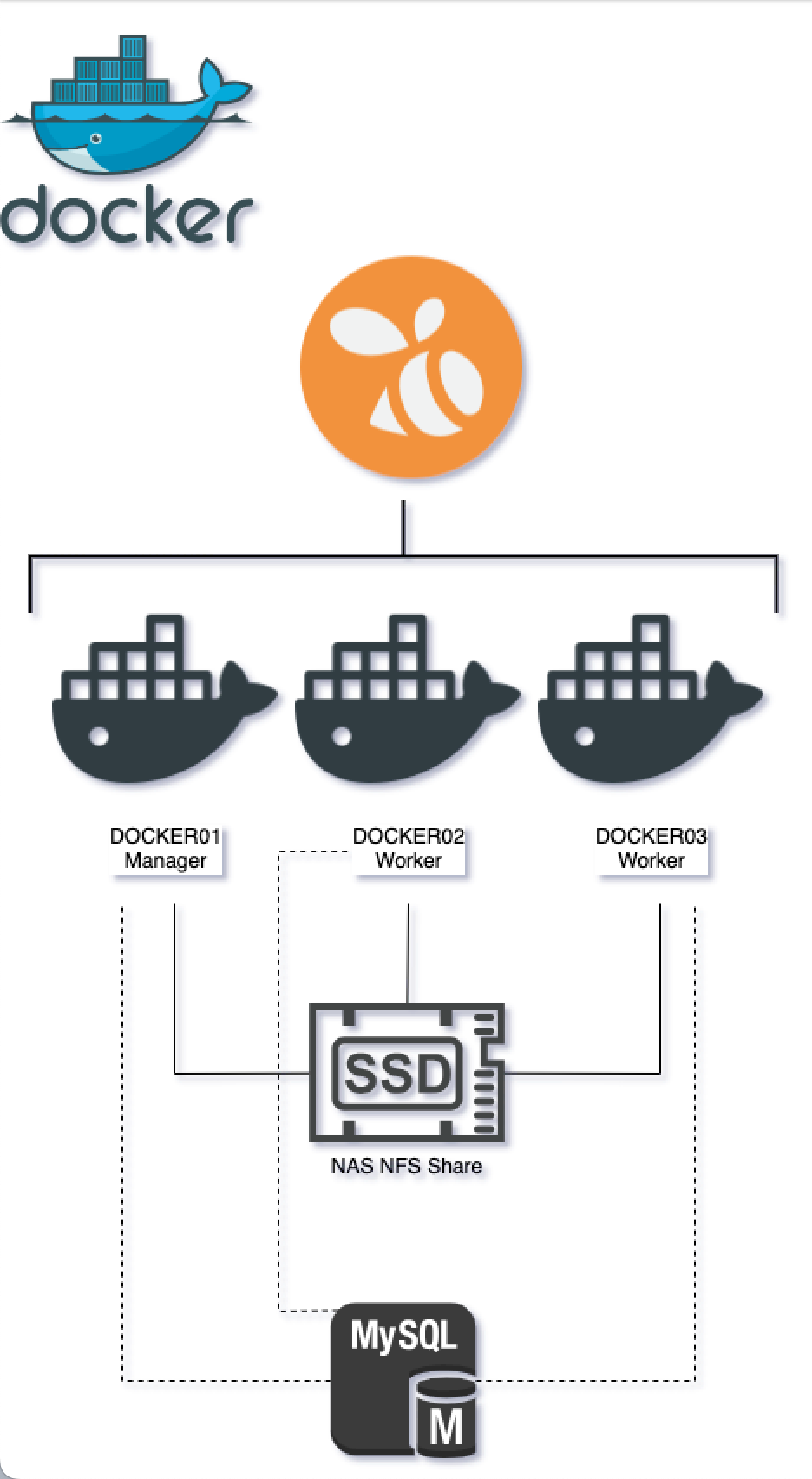

- to move the database from MariaDB to MySQL, outside of Docker

- to store all the blog data on a NFS share

- build 3 replicas of this instance to load blance it

Storage

First thing, and thanks to Andrew Morgan for pointing me in the right direction: I will use an NFS share hosted on my NAS to store some of the Docker container volumes, so that several instances of a container can run against a central file system.

NFS (Network File System) is a protocol that allows a client to access files over a network as if they were stored locally. A server exports a directory or file system, which one or more client machines can then mount. This gives transparent file sharing across the network, with several clients able to use the same files at once, which is exactly what multiple instances of the same container need. However, NFS has limitations (it depends on the network and on the NAS being available) and security concerns that require proper configuration and management.

My NFS volume is hosted on this machine: 192.168.0.66, and the root path is /volume2/swarmnfs/

On every machine in the Swarm, we need to install the NFS client:

```bash
sudo apt update && sudo apt upgrade -y
sudo apt install nfs-common
```

MySQL

I wanted to build a MySQL cluster, or use another database supported by my blog platform (MariaDB, etc.). But after reading a few articles here and there, including this one about MySQL benchmarks on NFS versus local storage, I changed my mind about containerising the database: containers will be kept for the applications only.
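The database move itself isn't detailed here. A common way to do it is a logical dump and restore, and the helper below is a minimal sketch of that approach under stated assumptions: the old MariaDB container exposes its root password as `MYSQL_ROOT_PASSWORD` and ships the `mysqldump` client, and the target database and user (`ghostdb` and `mysqluserx`, the names used in the compose file further down) already exist on `mysql.domain.local`. The function name is hypothetical.

```bash
# Hypothetical helper: copy one database out of the old MariaDB container and
# into the external MySQL server using a logical dump.
# Assumptions: the container defines MYSQL_ROOT_PASSWORD and includes mysqldump.
migrate_ghost_db() {
  if [ "$#" -ne 5 ]; then
    echo "usage: migrate_ghost_db CONTAINER SRC_DB DEST_HOST DEST_DB DEST_USER" >&2
    return 2
  fi
  local container="$1" src_db="$2" dest_host="$3" dest_db="$4" dest_user="$5"
  local dump
  dump="${src_db}-$(date +%Y%m%d).sql"

  # Run mysqldump inside the container; the database name is passed as $1 to
  # "sh -c" so the inner shell does not re-parse it.
  docker exec "$container" sh -c \
    'exec mysqldump --single-transaction -uroot -p"$MYSQL_ROOT_PASSWORD" "$1"' \
    sh "$src_db" > "$dump" || return 1

  # Load the dump into the external server; mysql prompts for DEST_USER's password.
  mysql -h "$dest_host" -u "$dest_user" -p "$dest_db" < "$dump" || return 1
  echo "restored $src_db into $dest_db on $dest_host (dump kept in $dump)"
}
```

For example, with a hypothetical container called `old-mariadb` holding a database called `ghost`: `migrate_ghost_db old-mariadb ghost mysql.domain.local ghostdb mysqluserx`. A MariaDB dump does not always import cleanly into MySQL, so trying the restore on a scratch database first is a good idea.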

Docker overlay network

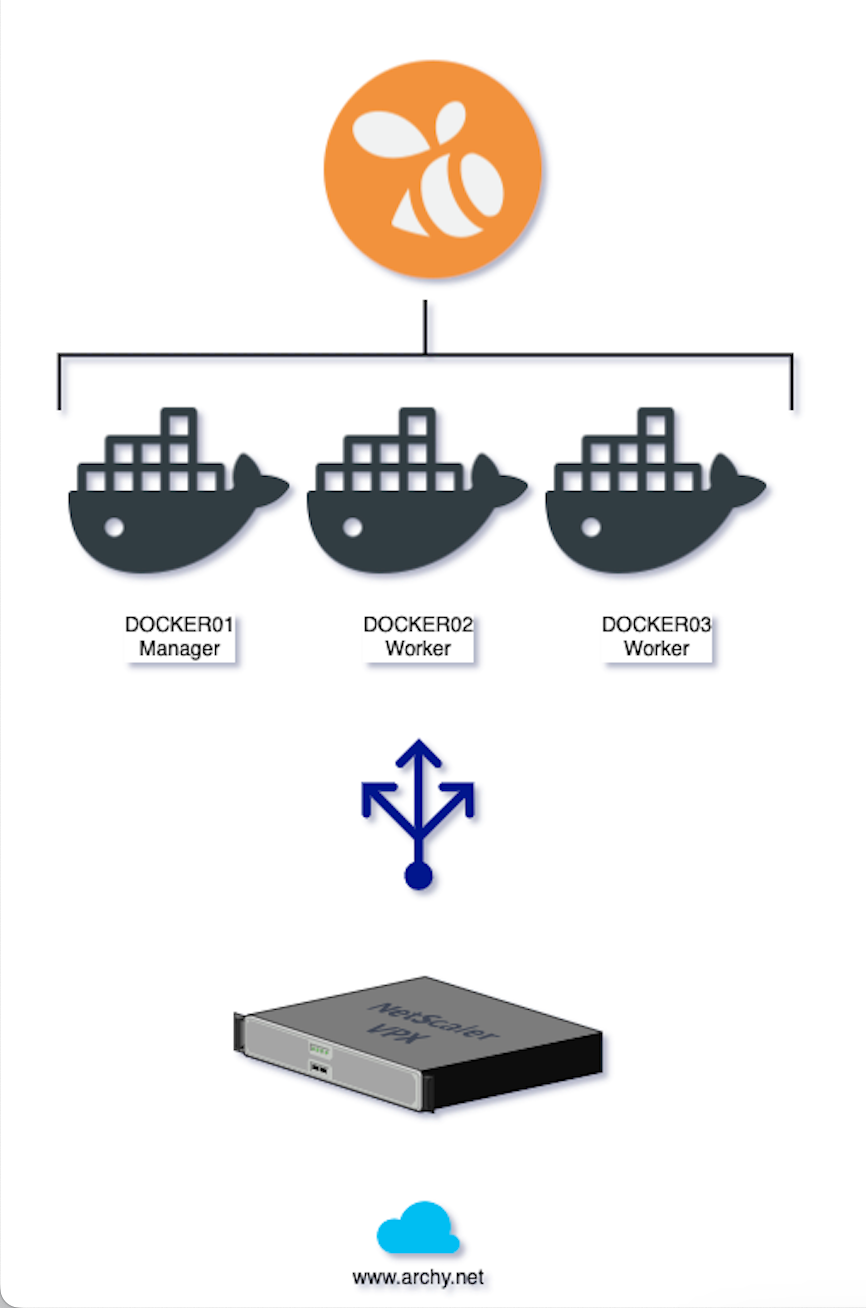

On the network side, since I will have multiple Ghost instances, I need a network using the overlay driver (Network Type).

The overlay network driver creates a virtual network spanning multiple Docker hosts (Swarm nodes), allowing containers on different hosts to communicate using private IP addresses. It relies on VXLAN encapsulation and provides built-in service discovery and load balancing. It is designed for multi-host environments and requires the Docker hosts to be part of a Swarm.

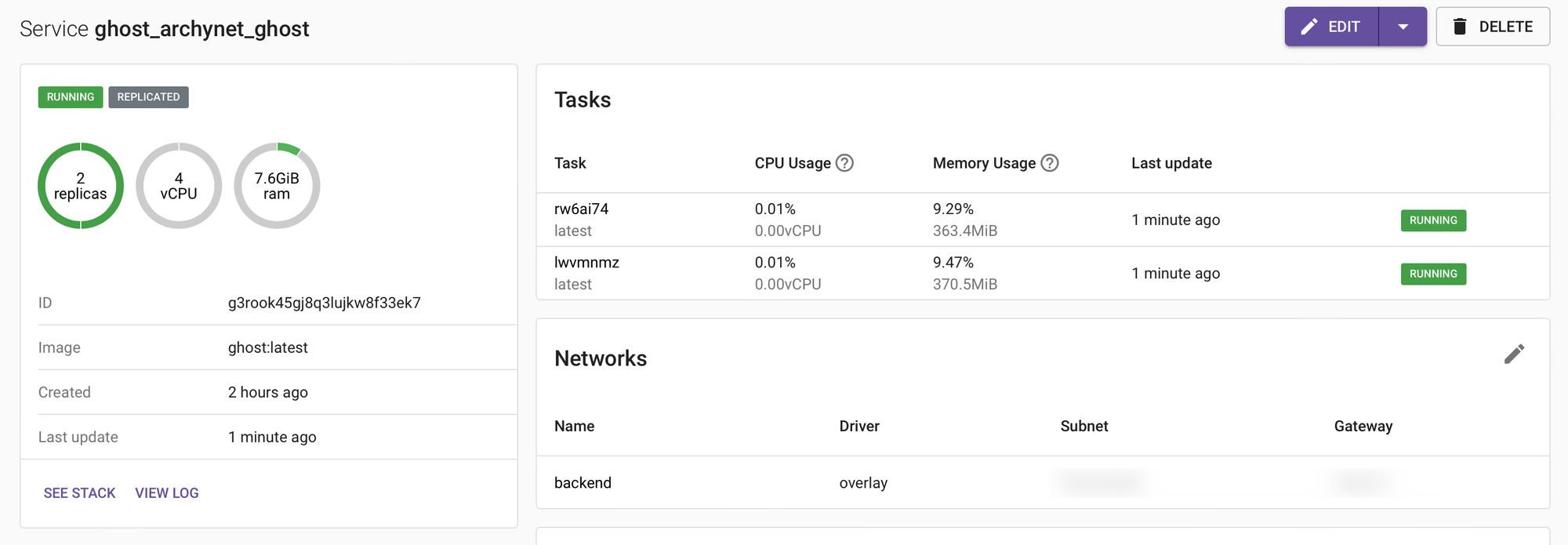
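The compose file further down declares the `backend` network as `external: true`, so the stack expects it to exist before deployment. A minimal CLI sketch for creating it, to run on a Swarm manager (the helper name is hypothetical; a UI such as Swarmpit can do the same). Per Docker's documentation, the nodes also need TCP 2377, TCP/UDP 7946 and UDP 4789 open between them for Swarm and overlay traffic.

```bash
# Hypothetical helper: create the overlay network that the Ghost stack
# references as external. Run it on a Swarm manager node.
create_overlay_network() {
  local name="${1:-backend}"
  # Do nothing if a network with this name already exists.
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
    return 0
  fi
  # --attachable additionally lets standalone containers join, handy for debugging.
  docker network create --driver overlay --attachable "$name"
}
```

Calling `create_overlay_network` with no argument creates `backend`; running it a second time is harmless.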

Ghost

I took the files from the source Docker container and simply copied them to the NFS share, into a folder dedicated to this website.
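A minimal sketch of that copy, assuming the NFS export is mounted on the host that runs the old container, and that the old container used Ghost's default content path `/var/lib/ghost/content` (the same path the new service mounts). The function name, container name and mount point below are hypothetical.

```bash
# Hypothetical helper: copy Ghost's content directory (images, themes, etc.)
# out of the old container into a directory on the mounted NFS share.
copy_ghost_content() {
  if [ "$#" -ne 2 ]; then
    echo "usage: copy_ghost_content CONTAINER DEST_DIR" >&2
    return 2
  fi
  local container="$1" dest="$2"
  mkdir -p "$dest" || return 1
  # The trailing "/." copies the directory's contents, not the directory itself.
  docker cp "$container:/var/lib/ghost/content/." "$dest"
}
```

For example: `copy_ghost_content old-ghost /mnt/swarmnfs/gpathtoghost/blog/content`. The official Ghost image runs Ghost as the `node` user, so if the new replicas cannot write to the share, file ownership and the NAS export's squash settings are the first things to check.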

Here is my docker-compose file, ready to be copied and pasted into Swarmpit. If you don't know what Swarmpit is, check here.

Here is the YAML file:

```yaml
version: '3.3'

services:
  ghost:
    image: ghost:latest
    environment:
      database__client: mysql
      database__connection__database: ghostdb
      database__connection__host: mysql.domain.local
      database__connection__password: 'complexpassw0rd123'
      database__connection__user: mysqluserx
      url: http://www.archy.net
    ports:
      - "2368:2368"
    volumes:
      - nfs-archynet-ghost:/var/lib/ghost/content
    networks:
      - backend
    logging:
      driver: json-file
    deploy:
      replicas: 3

networks:
  backend:
    external: true

volumes:
  nfs-archynet-ghost:
    driver: local
    driver_opts:
      device: ":/volume2/swarmnfs/gpathtoghost/blog/content"
      o: addr=192.168.0.66,nolock,soft,rw,nfsvers=4
      type: nfs
```
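With the `local` driver and `type: nfs`, each node mounts the export only when a task using the volume starts there, so a typo in `device` or a node without `nfs-common` typically shows up as replicas failing to start. Mounting the same export by hand on every Swarm node, with the options from `driver_opts`, rules that out early. A minimal sketch; the function name is hypothetical, and it needs root because it calls `mount`.

```bash
# Hypothetical pre-flight check: mount the export with the same options as the
# volume's driver_opts, list it, then clean up. Run as root on each Swarm node.
check_nfs_export() {
  local server="${1:-192.168.0.66}"
  local export_path="${2:-/volume2/swarmnfs/gpathtoghost/blog/content}"
  local mnt
  mnt="$(mktemp -d)" || return 1

  # mount.nfs takes the server from the "server:path" source, so addr= is not needed.
  if ! mount -t nfs -o nolock,soft,rw,nfsvers=4 "$server:$export_path" "$mnt"; then
    rmdir "$mnt"
    echo "FAILED: cannot mount $server:$export_path" >&2
    return 1
  fi
  ls -la "$mnt"
  umount "$mnt"
  rmdir "$mnt"
  echo "OK: $server:$export_path is mountable"
}
```

Shell functions are not visible to `sudo`, so define and run it from a root shell (for example after `sudo -i`); with no arguments, `check_nfs_export` uses the address and path from the compose file.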

Once this is deployed, 3 replicas will be available, thanks to this option:

```yaml
deploy:
  replicas: 3
```

Here is a schema of what has been built, Docker Swarm-wise:

And then on the network side, with a load balancer in front of the 3 Docker instances:
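Since the service publishes port 2368 through Swarm's ingress routing mesh, every node in the Swarm answers on that port and forwards the request to one of the replicas, even a node that runs none. The load balancer can therefore simply use the node addresses as backends. A minimal sketch for probing each backend from the load balancer's side; the helper name and the node addresses in the example are hypothetical.

```bash
# Hypothetical helper: probe Ghost on port 2368 of every node given as an
# argument and report the HTTP status code each one returns.
check_ghost_backends() {
  local node code failed=0
  for node in "$@"; do
    # -s hides progress, -o discards the body, -w prints only the status code
    # (curl prints 000 when the connection fails; "|| true" keeps that value).
    code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "http://$node:2368/" || true)"
    echo "$node: HTTP $code"
    case "$code" in
      2??|3??) ;;
      *) failed=1 ;;
    esac
  done
  return "$failed"
}
```

For example, `check_ghost_backends 192.168.0.11 192.168.0.12 192.168.0.13` prints one status line per node and returns non-zero if any node does not answer with a 2xx or 3xx code.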

In an ideal world, I would leave the manager alone, without hosting any Ghost replica on it. In the end, I removed Ghost from the manager, so the stack runs only on the two workers.
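How Ghost was taken off the manager isn't detailed. Swarm offers two standard ways to get that result: a placement constraint on the service, or draining the manager with `docker node update --availability drain` so it receives no tasks at all. A minimal sketch of the constraint approach, followed by a check of where the tasks landed. The helper name is hypothetical, and `archynet_ghost` is only the name the service would get if the stack were deployed as `archynet`.

```bash
# Hypothetical helper: restrict a service to worker nodes, then list where its
# running tasks are scheduled. Run it on a Swarm manager.
pin_to_workers() {
  local service="${1:-archynet_ghost}"
  docker service update --constraint-add 'node.role==worker' "$service" || return 1
  # Show the name, node and current state of each task meant to be running.
  docker service ps --filter desired-state=running \
    --format '{{.Name}} {{.Node}} {{.CurrentState}}' "$service"
}
```

A redeploy from the compose file resets the service to what the file says, so to make this stick, the same rule belongs in the file under `deploy.placement.constraints`, as `node.role == worker`.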

Let's see how it works in the long run.